A useful little device

1st October 2011

Last weekend, I ran into quite a lot of bother with my wired broadband service. Eventually, after a few phone calls to my provider, the fault was traced to my local telephone exchange, and it took another few days before it finally got sorted. Before that, a new ADSL filter (from a nearby branch of Maplin, as it happened) was needed because the old one didn’t work with my phone. Without it, the way that I have things set up would have made it impossible to work out what was happening with the broadband clashing with my phone. Resetting the router came next, and then there was a password change before the exchange was blamed. After all that, connectivity is back again, and I even upgraded in the middle of it all. Downloads are faster and television viewing is a lot, lot smoother too. Having seen fairly decent customer service throughout all this, I am planning to stick with my provider for a while longer.

Of course, this outage could have left me disconnected from the Internet but for the rise of mobile broadband. Working off dongles is all very fine until coverage lets you down, and that seems to be my experience with Vodafone at the moment. Another fly in the ointment was my having a locked-down work laptop that didn’t allow the software installation needed for running these things. That was not an unexpected state of affairs, though it is possible to connect it over wired and wireless networks using VPN. With my needing to work from home on Monday, I really had to get that computer online. Saturday evening saw me getting my Toshiba laptop online using mobile broadband and then setting up an ad hoc network using Windows 7 to hook up the work laptop. To my relief, that did the trick, but the next day saw me come across another option in Argos (the range of computing kit in there still continues to surprise me) that made life even easier.

While seeing if it was possible to connect a wired or wireless router to mobile broadband, I came across devices that both connect via the 3G network and act as wireless routers too. Vodafone has an interesting option into which you can plug a standard mobile broadband dongle for the required functionality. For a while now, 3 has had its MiFi, which connects to the mobile network and relays Wi-Fi signals too. Though 3 pioneered this as far as I know, others are following its lead, with T-Mobile offering something similar: its Wireless Pointer. Unsurprisingly, Vodafone has its own too, though I didn’t find any mention of mobile Wi-Fi on the O2 website.

That trip into Argos resulted in a return home to find out more about the T-Mobile device before making a purchase. Having had a broadly positive experience of T-Mobile’s network coverage, I was willing to go with it as long as it didn’t need a dongle. The T-Mobile dongle that I have seems not to be working properly, so I needed to make sure that wasn’t going to be a problem before I spent any money. When I brought home the Wireless Pointer, I swapped the SIM card over from the dongle and got going without too much to do. Thankfully, the Wi-Fi is secured using WPA2 and the documentation tells you where to find the key. Having things secured like this means that nobody else can fritter away your monthly allowance, and that’s as important for PAYG customers (like me) as it is for those with a contract. Of course, it makes eavesdropping more difficult too. So far, I have stuck with using it while plugged in to an electrical socket (USB computer connections are possible as well), but I need to check on the battery life. Up to five devices can be connected by Wi-Fi, and I can vouch that working with two connected devices is more than a possibility. My main PC has acquired a Belkin Wi-Fi dongle in order to use the Wireless Pointer, and that has worked very well. In fact, I found that connectivity was independent of which operating system I used: Linux Mint, Ubuntu, Windows XP and Windows 7 all connected without any bother. The gadget fits in the palm of my hand, so it hardly can be called large, but it does what it sets out to do and I have been glad to have it so far.

Best left until later in the year?

26th January 2010

In the middle of last year, my home computing experience was one of feeling displaced. A combination of a stupid accident and a power outage had rendered my main PC unusable. What followed was an enforced upgrade that used a combination familiar to me: Gigabyte motherboard, AMD CPU and Crucial memory. However, assembling that lot and attaching components from the old system resulted in the sound of whirring fans but nothing appearing on-screen. Not having useful beeps to guide me meant that it was a case of educated guesswork until the motherboard was found to be at fault. In a situation like this, a deeper knowledge of electronics would have been handy and might have saved me money too. As for the motherboard, it is hard to say whether it was faulty from the outset or whether there was a mishap along the way, either due to ineptitude with static electricity or incompatibility with a power supply. What really tells the tale on the mainboard is the fact that all of the other components are working well in other circumstances, even that old power supply.

A few years back, I had another experience with a problematic motherboard, an Asus this time, that ate CPUs and damaged a hard drive before I stabilised things. That was another upgrade attempted in the first half of the year. My first round of PC building was in the third quarter of 1998, and that went smoothly once I realised that a new case was needed. Similarly, another PC rebuild around the same time of year in 2005 was equally painless. Based on these experiences, I should not be blamed for waiting until later in the year before doing another rebuild, preferably a planned one rather than an emergency.

Of course, there may be another factor involved too. The hint was a non-working Sony DVD writer that was acquired early last year, when it really was obvious that we were in the middle of a downturn. Could older unsold inventory be a contributor? Well, it fits in with seeing poor results twice. In addition, it would certainly tally with a problematic PC rebuild in 2002, following the end of the Dot Com bubble and the deadly Al Qaeda attack on New York’s World Trade Centre. An IBM hard drive acquired then may not have been the best example of the bunch, and the same comment could apply to the Asus motherboard. The resulting construction may have been limping, but it was working and I tolerated it.

In contrast, last year’s episode had me launched into using a Toshiba laptop and a spare older PC for my needs, with an external hard drive enclosure used to extract my data onto other external hard drives to keep me going. It felt a precarious arrangement, but it was a useful experience in some ways too. There was cause for making the acquaintance of nearby PC component stores that I hadn’t visited before, and I got to learn about things that otherwise wouldn’t have come my way. Using an external hard drive enclosure for accessing data on hard drives from a non-functioning PC is one of these. Discovering that it is possible to boot from external optical and hard disk drives came as a surprise too; this works so long as the motherboard supports it. Another experience came from a crisis of confidence that had me acquiring a bare-bones system from Novatech and populating it with optical and hard disk drives. Then, I discovered that I have no need for power supplies rated at more than 300 watts (around 200 W suffices). Turning my PC off more often became a habit, friendly both to the planet and to household running costs. Then, there’s the beneficial practice of shopping locally: it can suffice even if it doesn’t always offer what PC magazines put on their hot lists, and shopping online for those pieces doesn’t guarantee success either. All of these were useful lessons and, while I’d rather not throw good money after bad, it goes to show that even unsuccessful acquisitions had something to offer in the form of learning opportunities. Whether you consider that worthwhile is up to you.

A self-hosted online photo album option

16th July 2009

I was perusing a recent copy of Linux Format and encountered a feature describing a self-hosted alternative to the likes of Flickr: Gallery. From my quick look, it seems fully featured, offering themes and even shopping cart facilities for those who want to sell their wares. The screenshots on the open-source project’s website look promising but, for a fuller appraisal, I would need to spend some time trying to bend it to my will. Before anyone mentions it, I am aware that WordPress can be used for photoblogging, but this tool seems to take things a bit further. It’s the sort of thing about which I might have wondered, given the pervasiveness of content management systems these days. My own custom-built photo gallery is devoid of a slick back end, which is why Gallery caught my eye, but I’ll continue with it and may even get to adding the needful myself.

Never undercutting the reseller…

23rd October 2009

Quite possibly, THE big technology news of the week has been the launch of Windows 7. Regular readers may be aware that I have been having a play with the beta and release candidate versions of the thing since the start of the year. In summary, I have found it to work both well and unobtrusively. There have been some rough edges when working with files through VirtualBox’s shared folders, its means of accessing the host file system from a VM, but that’s the only perturbation to be reported and, even then, it only seemed to affect my use of Photoshop Elements.

Therefore, I had it in mind to get my hands on a copy of the final release after it came out. Of course, there was the option of pre-ordering, but that isn’t for everyone, so there are other routes. A trip down to the local branch of PC World will allow you to satisfy your needs with full, upgrade (if you already have a copy of XP or Vista, it might be worth trying out the Windows Secrets double installation trick to get it loaded on a clean system) and family packs. The last of these is very tempting: three Home Premium licences for around £130. Wandering around to your local PC components emporium is an alternative, but you have to remember that OEM versions of the operating system are locked to the first (self-built) system on which they are installed. Apart from that restriction, their good value compared with retail editions makes them worth considering. Another option that I wish to bring to your attention is buying directly from Microsoft themselves. You would think that this might be cheaper than going to a reseller, but that’s not the case: the Family Pack costs around £150 there, compared with PC World’s £130 or so, and it doesn’t end there. That they only accept Maestro debit cards along with credit cards from the likes of Visa and Mastercard is perhaps another sign that Microsoft are new to the whole idea of selling online. Tesco, by contrast, is no stranger to online selling and has Windows 7 on offer, even though it isn’t noted for computer sales. PC World may be forgiven for wondering what that means, but who would buy an operating system along with their groceries? I suppose that the answer is that people who are accustomed to having their essentials delivered at a convenient time should be able to do the same with computer goods too. That convenience of timing is another feature of downloading an OS from the web, and many a Linux fan should know what that means. Microsoft may have discovered this of late, but that’s better than never.

Given my positive experience with the pre-release variants of Windows 7, I am very tempted to get my hands on the commercial release. Because I have until early next year with the release candidate, and XP works sufficiently well (it ultimately has given Vista something of a soaking), I’ll be able to bide my time. When I do make the jump, it’ll probably be Home Premium that I’ll choose because it seems difficult to justify the extra cost of Professional. It was different in the days of XP, when its Professional edition did have something to offer technically minded home users like me. With 7, XP Mode might be a draw, but with virtualisation packages like VirtualBox available at no cost, it’s hard to justify spending extra. In any case, I have Vista Home Premium loaded on my Toshiba laptop and that seems to work fine, in spite of all the bad press that Vista has gotten for itself.

Blogging with Word 2007

1st February 2007

It seems strange to say it, but I am making good use of Word’s blogging capabilities. Having had WordPress.com’s blog editor mangle one of my posts (incidentally while using Opera as my browser) is the cause of this turn of events.

When setting up new accounts, there are a number of presets available for working with major blogging providers such as Blogger, WordPress, and TypePad. This is not all, though, as it is possible to hook up to other blogs in a more generic fashion. In fact, I have been able to hook up to my other WordPress-powered blog, hosted on the same server as my personal website and with all of the associated programming and scripting handled by myself. Where you have a number of accounts set up in the application, a drop-down menu appears in the post so that you can select the account to be used.

Speaking of drop-down menus embedded in the post, you can add categories to a post from the blog server’s own collection, and you can have more than one in any post. This feature is a boon, as is the ability to edit posts that are already on there, but Word only seems to show a subset of all the posts on the server, about 20 I think, rather than every single one. Whether that limit is a result of RSS feed settings or is intrinsic to Word itself remains something that I have yet to discern. Another caveat is that you need to use a separate window for each post or you’ll end up overwriting posts in error. As it is Word, formatting and the insertion of objects such as hyperlinks and images are very much part of the package. That said, uploading images via this route was not something that I tested until I was writing this post, but it seems to work well.

Apart from the irritations discussed above, I did find Word crashing a few times, but no data were lost thanks to its seemingly excellent file recovery capabilities, a definite counterpoint to some of my experiences with Word’s file recovery feature in previous versions. Eventually, the Office Diagnostics tools kicked in to see if all was well and, after carrying out both hardware (memory, hard drive, etc.) and software checks, an installation repair was performed. Let’s see if this resolves the issue. Either way, the crash recovery and diagnostics were not something that I had seen to the same extent in previous versions of Office, and they did look pretty impressive.

In summary, Word does seem to be a good blogging tool, but I wouldn’t use it on its own because of its inability to download a full list of posts for editing. A blog’s own interface will remain necessary for that. Also, Word is far from being the only “offline” blog editor out there, and I am tempted to take a look at the likes of BlogJet and w.bloggar.

Forcing Ubuntu (and Debian) to upgrade to a newer distribution version

8th October 2008

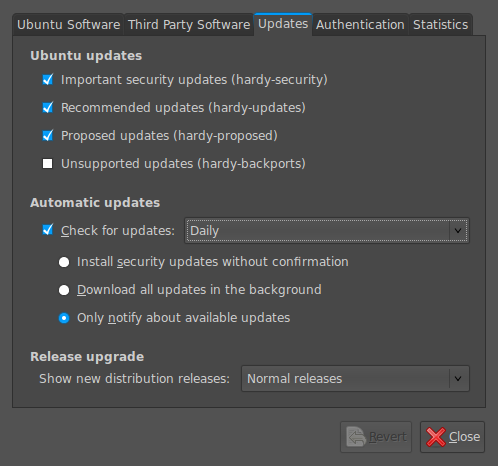

Ubuntu is usually good at highlighting the existence of a new version of the distribution through its Update Manager. That means that 8.10 should be offered to you at the end of the month, so long as you have changed the relevant setting in 8.04 so that it looks out for new releases. That lives in System > Administration > Software Sources > Updates. If you haven’t done that, then 8.04 will carry on regardless, since it is a long-term support release.

Otherwise, it’s over to the command line to sort you out. One of the commands below will do, with the first just carrying out a check for a new stable version of Ubuntu and the second going all the way:

sudo update-manager -c

sudo update-manager -p

If you are feeling more adventurous, you can always try the development version, and this command checks for one of those (I successfully used it to try out the beta release of Intrepid Ibex from within a Wubi instance on my laptop):

sudo update-manager -d

None of the above options is available on Debian, so they seem to be Ubuntu enhancements. That is not to say that you cannot force the issue with Debian; it’s just that the more generic variant is used and, unless you have been fiddling with visudo, you will need to run this as root (it works in Ubuntu too):

update-manager --dist-upgrade
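A more common route on Debian does not involve update-manager at all. You point APT’s sources at the new release’s codename and then run apt-get update followed by apt-get dist-upgrade as root. Here is a minimal sketch of the first step only. It assumes classic one-line entries in /etc/apt/sources.list, the function name switch_codename is hypothetical, and etch and lenny are just example codenames. The function keeps a .bak copy so that the change can be undone.

```bash
# Rewrite the release codename in an APT sources file, keeping a .bak copy.
# Assumes one-line "deb URL codename components" entries; the codename is
# only replaced when preceded by whitespace and followed by whitespace, "/",
# "-" or the end of the line, so "etch/updates" and "etch-backports" work too.
switch_codename() {
  local old new file
  old=$1
  new=$2
  file=${3:-/etc/apt/sources.list}
  sed -i.bak -E "s#([[:space:]])${old}([[:space:]/-]|\$)#\1${new}\2#g" "$file"
}

# Example (as root):  switch_codename etch lenny
# then:               apt-get update && apt-get dist-upgrade
```

Check the rewritten file by eye before running the upgrade itself, and make sure that there is a backup of anything important beforehand.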

Compressing a VirtualBox VDI file for a Linux guest

6th June 2016

In a previous posting, I talked about compressing a virtual hard disk for a Windows guest system running in VirtualBox on a Linux system. Since then, I have needed to do the same for a Linux guest following some housekeeping. The Linux distribution used is Debian, so the instructions are relevant to that and maybe its derivatives such as Ubuntu, Linux Mint and their kind.

While there are alternatives such as dd, I am going to stick with a utility named zerofree, which overwrites the newly freed disk space with zeroes to aid compaction later in the process. It works on ext2, ext3 and ext4 file systems. The first step is to install it using the following command:

sudo apt-get install zerofree

Once that has been completed, the next step is to unmount the relevant disk partition. Luckily for me, what I needed to compress was an area that I reserved for synchronisation with Dropbox. If it had been the root partition where the operating system files are kept, a live distro would have been needed instead. In any event, the required command takes the following form, with the mount point being whatever it is on your system (/home, for instance):

sudo umount [mount point]

With the disk partition unmounted, zerofree can be run by issuing a command that looks like this:

sudo zerofree -v /dev/sdxN

Above, the -v switch tells zerofree to display its progress, and a continually updating percentage count tells you how it is going. The /dev/sdxN piece is generic, with the x corresponding to the letter assigned to the disk on which the partition resides (a, b, c or whatever) and the N being the partition number (1, 2, 3 or whatever; with the older MBR partitioning scheme, there can be at most four primary partitions, and any logical partitions are numbered from 5 upwards). Putting all this together, we get an example like /dev/sdb2.
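Before running zerofree, it is worth checking that the device name is the one intended and that the partition really is unmounted, since zerofree is meant for file systems that are unmounted or mounted read-only. The shell function below is a minimal sketch of such a check, and preflight_zerofree is a hypothetical name. It assumes /dev/sdxN-style names, so NVMe names like /dev/nvme0n1p1 are rejected. It also only spots a mount listed under that exact name in /proc/mounts, so a partition mounted via a /dev/disk/by-uuid path or similar would slip through. The function prints the disk letter and partition number as a sanity check and does not run zerofree itself.

```bash
# Pre-flight check before running zerofree on a partition.
# Assumptions: /dev/sdxN-style device names, and the mounts table lists the
# device under that same name. The mounts file defaults to /proc/mounts;
# a second argument overrides it (handy for testing).
preflight_zerofree() {
  local dev mounts disk part
  dev=$1
  mounts=${2:-/proc/mounts}
  # Split /dev/sdxN into the disk letter(s) x and the partition number N
  disk=$(printf '%s\n' "$dev" | sed -n 's|^/dev/sd\([a-z][a-z]*\)\([0-9][0-9]*\)$|\1|p')
  part=$(printf '%s\n' "$dev" | sed -n 's|^/dev/sd\([a-z][a-z]*\)\([0-9][0-9]*\)$|\2|p')
  if [ -z "$disk" ]; then
    echo "not a /dev/sdxN partition name: $dev" >&2
    return 2
  fi
  echo "disk=$disk partition=$part"
  # The first field of each line in the mounts table is the mounted device
  if awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$mounts"; then
    echo "$dev is still mounted; unmount it first" >&2
    return 1
  fi
  echo "ok to run: zerofree -v $dev"
}
```

For example, preflight_zerofree /dev/sdb2 prints disk=b partition=2 and then either a warning (with a non-zero exit status) or the zerofree command to run.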

Once that has completed, the next step is to shut down the VM and execute a command like the following on the host Linux system ([file location/file name] needs to be replaced with whatever applies on your system):

VBoxManage modifyhd [file location/file name].vdi --compact
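Where several virtual disks need the same treatment, a loop saves some typing. The following is a sketch that assumes every VM using these disks has been shut down and that VBoxManage is on the PATH. The name compact_vdis is hypothetical. The function reports the size on disk before and after each compaction using du. As far as I know, recent VirtualBox releases call this subcommand modifymedium while still accepting modifyhd.

```bash
# Compact every .vdi file in one directory, reporting sizes before and after.
# Assumptions: the VMs using these disks are shut down and VBoxManage is on
# the PATH.
compact_vdis() {
  local dir vdi before after
  dir=$1
  for vdi in "$dir"/*.vdi; do
    [ -e "$vdi" ] || continue   # no .vdi files: the glob stays unexpanded
    before=$(du -k "$vdi" | cut -f1)
    VBoxManage modifyhd "$vdi" --compact || return 1
    after=$(du -k "$vdi" | cut -f1)
    echo "$vdi: ${before} KiB -> ${after} KiB"
  done
}
```

For example, compact_vdis "$HOME/VirtualBox VMs/Debian" would compact every .vdi file in that (hypothetical) folder. The quotes matter because VirtualBox’s default folder name contains a space.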

With the zero filling in place, a lot of space was released when I tried this. While it would be nice for dynamic virtual disks to shrink automatically, I accept that there may be data integrity risks with that, so the manual process will suffice for now. It has not been needed that often anyway.

Performing parallel processing in Perl scripting with the Parallel::ForkManager module

30th September 2019

In a previous post, I described how to add Perl modules in Linux Mint while mentioning that I hoped to add another that discusses the use of the Parallel::ForkManager module. This is that second post, and I am going to keep things as simple and generic as they can be. There are other articles, like one on the Perl Maven website, that go into more detail.

The first thing to do is ensure that the Parallel::ForkManager module is loaded by your script, and having the following line near the top will do just that. Without it, the module is not loaded and errors will be generated when the script tries to use it.

use Parallel::ForkManager;

Then, the maximum number of threads needs to be specified. Strictly speaking, Parallel::ForkManager forks child processes rather than creating threads, but the term is a handy shorthand here. While the maximum can be set using a simple variable declaration, the following line reads it from the command line used to invoke the script. It even tells a forgetful user what they need to do in its own terse manner. Here, $0 is the name of the script and N is the number of threads. This value is an upper limit on how many child processes run at once, so not all of them will necessarily be in use, and how much of the processor actually gets used depends on the workload.

my $forks = shift or die "Usage: $0 N\n";

Once the maximum number of available threads is known, the next step is to instantiate the Parallel::ForkManager object as follows to use these child processes:

my $pm = Parallel::ForkManager->new($forks);

With the Parallel::ForkManager object available, it is now possible to use it as part of a loop. A foreach loop works well, though it iterates over a single array; where other collections need to be consulted along the way, hashes are needed. Two extra statements are needed in the loop: one to start a child process and another to end it.

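foreach my $t (@array) {
    # start() forks a child: it returns the child's PID in the parent,
    # which then moves on to the next item, and 0 in the child
    my $pid = $pm->start and next;

    # Code to be processed for each $t goes here (it runs in the child)

    $pm->finish;    # ends the child process
}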

Since a script often performs other processing, and it is possible to have multiple parallel loops in one script, there needs to be a way of making the parent process wait until all the child processes have completed before it moves from one step to the next. That is what the following statement does; in short, it adds more control.

$pm->wait_all_children;

To close, there needs to be a comment on the advantages of parallel processing. Modern multi-core processors often get used for single-threaded operations, and that leaves most of their capacity unused. Utilising this extra power can shorten processing times markedly. To give you an idea of what can be achieved, I had a single script taking around 2.5 minutes to complete in single-threaded mode, while setting the maximum number of threads to 24 reduced this to just over half a minute, taking up 80% of the processing capacity. This was with an AMD Ryzen 7 2700X CPU with eight cores and a maximum of 16 processor threads. Surprisingly, using 16 as the maximum thread number only used half the processor capacity, so it seems to be a matter of performing one’s own measurements when making these decisions.
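Since the best setting needs to be measured rather than guessed, a small shell function can time the same script at several fork counts. This is a sketch under some assumptions:

- the script takes the fork count as its only argument, as with the usage line earlier in this post;
- GNU date is available for its %N (nanoseconds) field;
- time_fork_counts and process.pl are hypothetical names.

RUNNER defaults to perl but can be set to another interpreter.

```bash
# Time one script at several fork counts to help choose a setting.
# Assumptions: the script takes the fork count as its only argument, and
# GNU date is available (for the %N nanoseconds field).
# RUNNER defaults to perl; it can be overridden, e.g. for testing.
time_fork_counts() {
  local script runner n start end
  script=$1
  shift
  runner=${RUNNER:-perl}
  for n in "$@"; do
    start=$(date +%s%N)
    if ! "$runner" "$script" "$n" > /dev/null; then
      echo "forks=$n failed" >&2
      continue
    fi
    end=$(date +%s%N)
    echo "forks=$n elapsed_ms=$(( (end - start) / 1000000 ))"
  done
}
```

For instance, time_fork_counts ./process.pl 1 8 16 24 "$(nproc)" prints one elapsed_ms line per setting, with nproc reporting the number of available processor threads. Watching a tool such as top alongside shows how much of the CPU each setting actually uses.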

A collection of lessons learnt about web hosting

28th March 2008

Putting this blog back on its feet after a spot of web hosting bother taught me a bit more about web hosting than I otherwise might have learnt. Here’s a selection, in no particular order:

- Store your passwords securely and where you can find them, because you never know when a foul-up of your own making can strike. For example, a faux pas with a configuration file is all that’s needed to cause havoc for a database-driven site such as a WordPress blog. After all, nobody’s perfect, and your hosting provider may not get you out of trouble as quickly as you might like.

- Get a MySQL database or equivalent as part of your package rather than buying one separately. If your provider allows a trial period, then changing from one package to another could be cheaper and easier than if you bought a separate database and needed to jettison it because you changed from, say, a Windows package to a Linux one or vice versa.

- It might be an idea to avoid a reseller unless the service being offered is something special. Going for the sake of lower cost can be a false economy and it might be better to cut out the middleman altogether and go direct to their provider. Being able to distinguish a reseller from a real web host would be nice but I don’t see that ever becoming a reality; it is hardly in resellers’ interests, after all.

- Should you stick with a provider that takes several days to resolve a serious outage? The previous host of this blog had a major MySQL server outage that lasted for up to three days and seeing that was one of the factors that made me turn tail to go to a more trusted provider that I have used for a number of years. The smoothness of the account creation process might be another point worthy of consideration.

- Sluggish system support really can frustrate, especially if there is no telephone support and the online ticketing system seems to take forever to deliver solutions. I would strongly advise that a host who offers a helpline is a much better option than one who doesn’t. Having said all of that, I think that it’s best to be patient, though, when your website is offline, that might not be as easy as you’d hope.

- Setting up hosting or changing from one provider to another can take a number of days because of all that needs doing. So, it’s best to allow for this and plan ahead. Account creation can be very quick but setting up the website can take time while domain name transfer can take up to 24 hours.

- It might not take the same amount of time to set up Windows hosting as its Linux equivalent. I don’t know if my experience was typical but I have found that the same provider set up Linux hosting far quicker (within 30 minutes) than it did for a Windows-based package (several hours).

- Be careful what package you select; it can be easy to pick the wrong one depending on how your host’s site is laid out and what they are promoting at the time.

- You can have a Perl/PHP/MySQL site working on Windows, even with IIS being used in place of Apache. The Linux/Apache/Perl/PHP/MySQL approach might still be better, though.

- The Windows option allows for ASP, .NET and other such Microsoft technologies to be used. I have to say that my experience and preference are for open source technologies, so Linux is my mainstay, but learning about the other side can never hurt from a career point of view. After all, I am writing this on a Windows Vista powered laptop to see how the other half live as much as anything else.

- Domains serviced by hosting resellers can be visible to the systems of those from whom they buy their wholesale hosting. This frustrated my initial attempts to move this blog over: I couldn’t get an account set up for technologytales.com because a reseller already had it on the same system. It was only when I got the reseller to delete the account with them that things began to run more smoothly.

- If things are not going as you would like them, getting your account deleted might be easier than you think so don’t procrastinate because you think it a hard thing to do. Of course, it goes without saying that you should back things up beforehand.
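For the backing up mentioned in the last point, something along the following lines would do for a site’s files. It assumes shell access and the tar utility on the hosting account, and backup_site and the paths are hypothetical. The function produces a timestamped .tar.gz archive and prints its name. A database-driven site such as a WordPress blog needs its database dumped too. The comment shows the general form of a mysqldump command for that, with placeholder names.

```bash
# Make a timestamped .tar.gz archive of a site directory and print its path.
# Assumes tar with gzip support (-z) is available on the account.
backup_site() {
  local src dest stamp archive
  src=$1
  dest=$2
  stamp=$(date +%Y%m%d-%H%M%S)
  archive="$dest/$(basename "$src")-$stamp.tar.gz"
  mkdir -p "$dest" || return 1
  # -C switches to the parent directory so the archive holds relative paths
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  echo "$archive"
}

# A database-driven site needs its database dumped as well, for example:
#   mysqldump -u DB_USER -p DB_NAME > backups/DB_NAME.sql
# (DB_USER and DB_NAME are placeholders; -p prompts for the password)
```

Copying the resulting archive and any database dump off the hosting account before asking for deletion is the important part.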

Using a BASH command to count the files in a directory

12th March 2024

As part of my backup workflow, I maintain a machine running OpenMediaVault that I only power up when backups are to be performed. Typically, this happens when I have new photography images to load, and I have a NAS that acts as an online backup system. The OpenMediaVault machine is a near-offline counterpart to the NAS for added safety.

Recently, I needed to check on the number of image files in a directory from an SSH session because of a need to create a new repository for 2024. Some files from this year had ended up in the 2023 one, and I needed to be sure that nothing from last year ended up in the 2024 folder, or vice versa. Getting a file count from a trusted source was a quick way of doing exactly this.

Due to clumsiness with the NAS, I had to do this using the OpenMediaVault machine. While I could go mounting drives on an interim basis, it was quicker to work from a BASH session. The trick was to use the wc command for counting the lines output by an invocation of the ls command. An example follows:

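ls -1 | wc -l

The -l (as in l for Lima) switch forces wc to count lines, while the -1 (the digit one) switch for ls forces it to list one item per line; ls does this anyway when its output is piped. Thus, counting the number of lines gets you the number of entries in the directory, subdirectories included. Using ls -l (long form) also gives one item per line, but it adds a “total” line at the top, so the count comes out one higher; that does not matter when two directories are compared in the same way. The call to the ls command can be customised to include other things like dot files (ls -A does that), but the above was enough for my purposes. When the files in both 2023 directories matched, I was satisfied that all was in order.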
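Where only regular files should be counted, find can do the listing instead of ls. The sketch below is an alternative rather than what I used, and count_files and compare_counts are hypothetical names. It counts regular files directly inside a directory, including dot files and excluding subdirectories. A file name containing a newline would be counted twice, though that is unlikely with camera images.

```bash
# Count regular files directly inside a directory (dot files included,
# subdirectories and their contents excluded).
count_files() {
  local dir n
  dir=${1:-.}
  n=$(find "$dir" -maxdepth 1 -type f | wc -l)
  echo $((n))   # arithmetic expansion drops any padding that wc adds
}

# Compare the file counts of two directories; returns 1 on a mismatch.
compare_counts() {
  local a b
  a=$(count_files "$1")
  b=$(count_files "$2")
  if [ "$a" -eq "$b" ]; then
    echo "match: $a files"
  else
    echo "mismatch: $1 has $a, $2 has $b"
    return 1
  fi
}
```

For example, compare_counts /srv/photos/2023 /srv/backup/2023 (hypothetical paths) either prints a match line or returns a non-zero status, which makes it easy to use in a script.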